
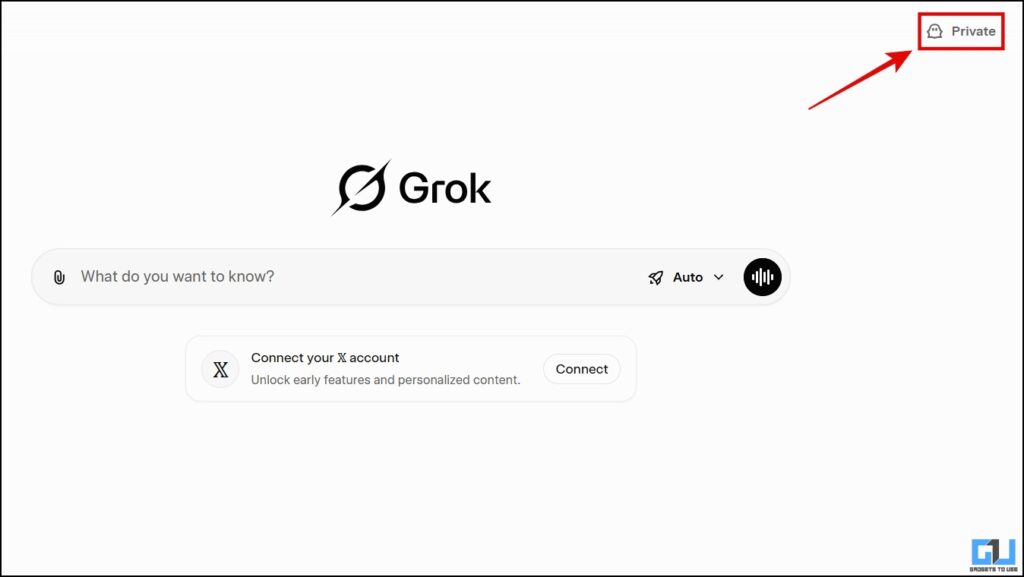

ChatGPT has quietly changed how it works. It is no longer limited to answering questions or writing text. With built-in apps, ChatGPT can now connect to real services you already use and help you get things done faster. Instead of bouncing between tabs and apps, you can stay in one conversation while ChatGPT builds playlists, designs graphics, plans trips or helps you make everyday decisions. It feels less like searching and more like having a digital assistant that understands what you want.

That convenience comes with responsibility. When you connect apps, take a moment to review permissions and disconnect access you no longer need. Used the right way, ChatGPT apps save time without giving up control. Here are the five best ChatGPT apps and how to start using them today.

Sign up for my FREE CyberGuy Report

Get my best tech tips, urgent security alerts, and exclusive deals delivered straight to your inbox. Plus, you’ll get instant access to my Ultimate Scam Survival Guide – free when you join my CYBERGUY.COM newsletter.


ChatGPT apps now let users connect everyday services like music, travel and design tools directly inside one conversation, changing how people get things done. (Philip Dulian/picture alliance via Getty Images)

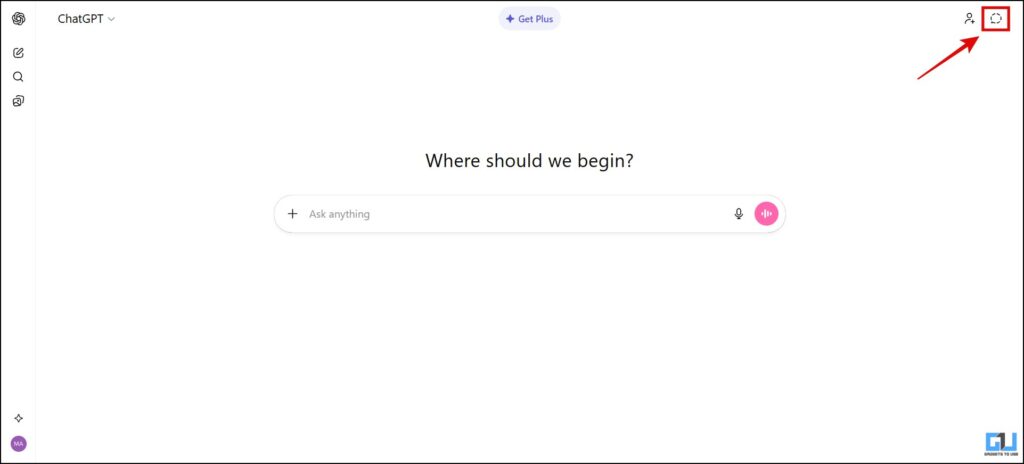

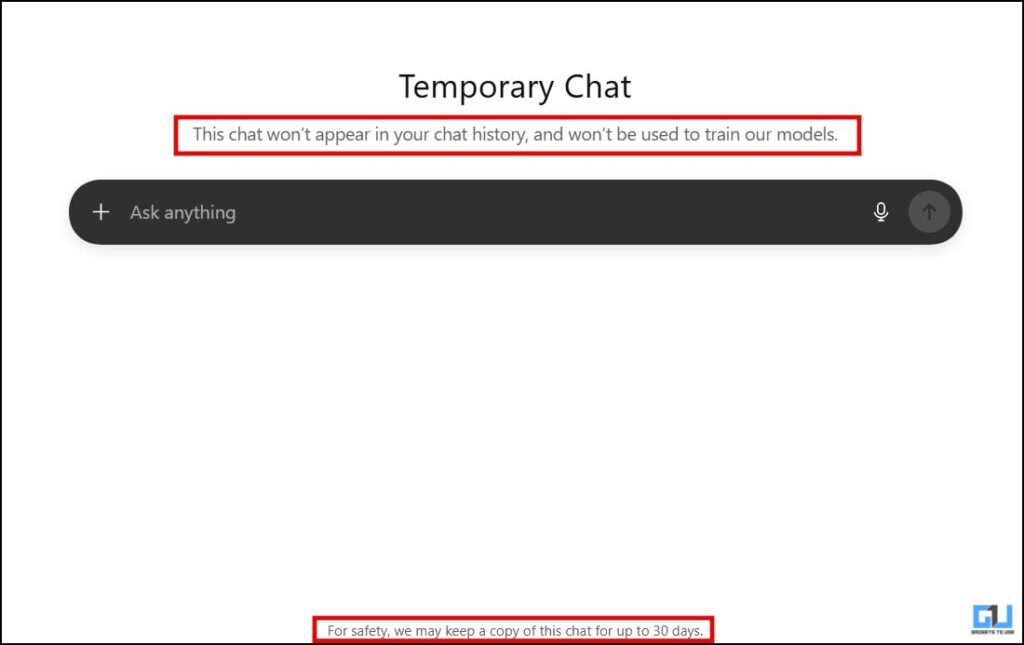

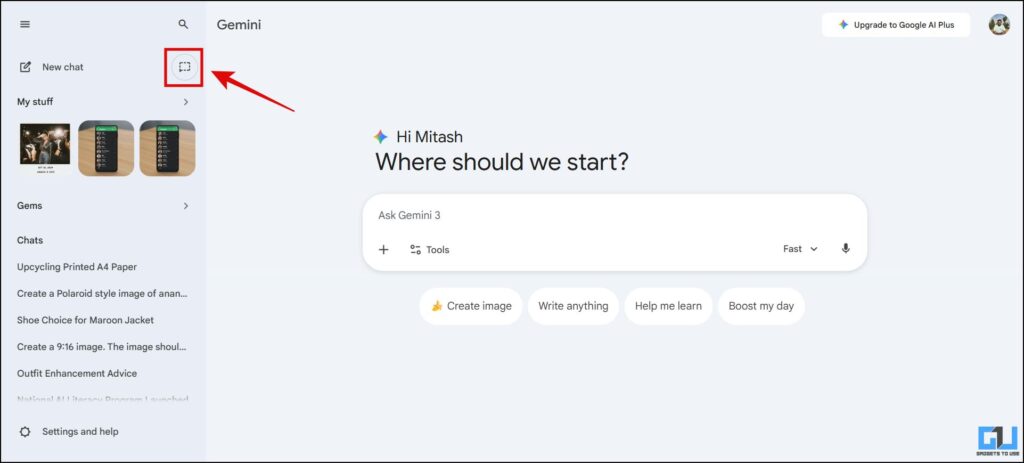

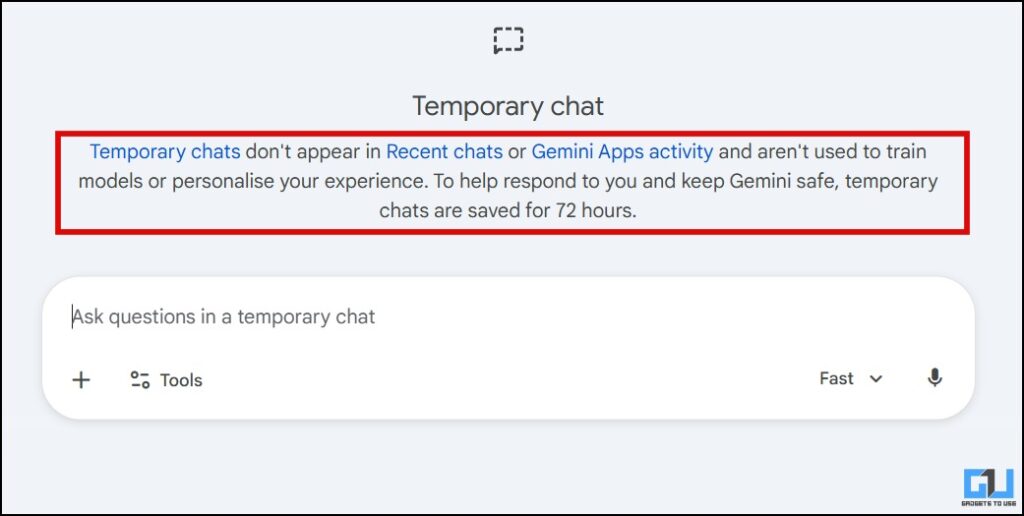

How to start using apps inside ChatGPT

If you have not used ChatGPT apps before, getting started is simple. You do not need any technical setup or extra downloads. Apps appear as tools you can enable inside a conversation.

App availability and placement may vary by device, model and region.

iPhone and Android

- Open the ChatGPT app and sign in

- Start a new chat

- Tap the plus (+) or tools icon near the message box

- Review the available tools or apps shown

- Select the app you want to use

- Follow the on-screen prompt to connect your account if needed

- Start asking ChatGPT to use the app naturally

Mac and PC

- Open ChatGPT in your browser or desktop app and sign in

- Start a new chat

- Look for available tools or apps in the chat interface

- Select the app you want to use

- Follow the on-screen prompt to connect your account if required

- Start asking ChatGPT to use the app

Once an app is connected, you can speak naturally. For example, you can ask ChatGPT to create a playlist, design a graphic or help plan a trip.

1. Apple Music

Apple Music is now available as an app inside ChatGPT, and it changes how people discover and organize music. Instead of scrolling through endless playlists, you can ask ChatGPT to create one using natural language. For example, you can request a holiday mix without overplayed songs or ask it to find a track you only half remember. ChatGPT searches Apple Music and builds the playlist for you. This integration does not stream full songs inside ChatGPT. It helps Apple Music subscribers find music, create playlists and discover artists faster, then links back to Apple Music for listening. ChatGPT can also activate Apple Music automatically based on your request, so you do not always need to select the app first.

Note: Apple Music requires an active subscription.

Why it stands out: It turns music discovery into a simple conversation instead of a search.

2. Canva

Canva’s ChatGPT app helps you turn ideas into visuals fast. You can describe what you want in plain language, and ChatGPT helps generate layouts, captions and design ideas that open directly in Canva. This works well for featured images, social posts and simple marketing graphics.

Why it stands out: You move from idea to design without starting from scratch.

3. Expedia

The Expedia app inside ChatGPT simplifies travel planning. You can ask for flight options, hotel ideas and destination tips in one conversation. ChatGPT also explains tradeoffs so you understand why one option may be better than another.

Why it stands out: It turns scattered travel research into a clear plan.

4. TripAdvisor

TripAdvisor inside ChatGPT helps you plan trips with real traveler insight, not just search results. You can ask for hotel recommendations, top attractions and things to do based on your travel style. ChatGPT pulls in reviews and rankings, then helps narrow choices so you are not overwhelmed. It works especially well when you want honest opinions before booking or planning a full itinerary.

Why it stands out: It combines real reviews with conversational guidance to make travel decisions easier.

5. OpenTable

OpenTable inside ChatGPT removes the guesswork from dining decisions. You can ask for restaurant recommendations by location, cuisine and vibe. From there, ChatGPT helps narrow choices and links you directly to reservations through OpenTable.

Why it stands out: It saves time when choosing where to eat, especially on busy nights.

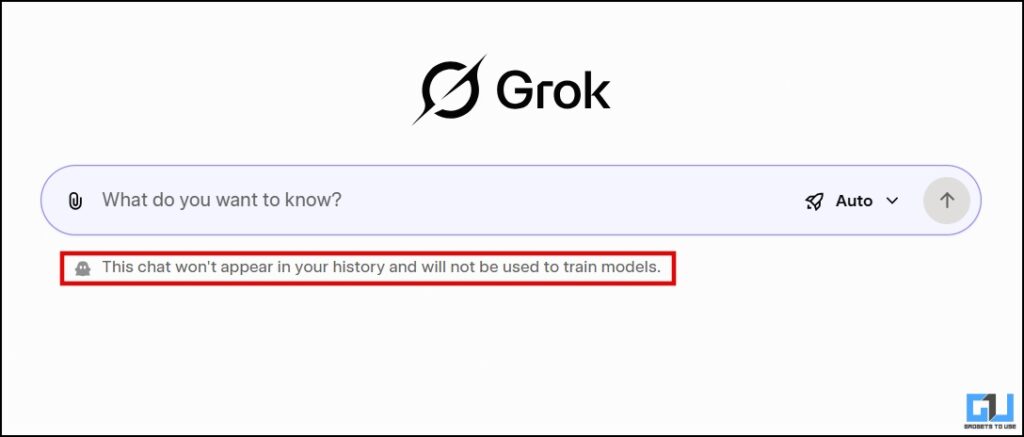

How to disconnect apps from ChatGPT

If you no longer use an app connected to ChatGPT, you can disconnect it at any time. Removing access helps limit data sharing and keeps your account more secure.

iPhone and Android

- Open the ChatGPT app and sign in

- Tap the menu icon

- Tap your profile icon

- Scroll down and tap Apps (it might say Connected apps, Tools or Integrations)

- Select the app you want to remove

- Tap Disconnect or Remove access

- Confirm your choice if prompted

Mac and PC

- Open ChatGPT in your browser or desktop app and sign in

- Click your profile icon

- Select Settings

- Open Apps (it might say Connected apps, Tools or Integrations)

- Choose the app you want to disconnect

- Click Disconnect or Remove access

- Confirm the change if prompted

Once disconnected, ChatGPT will no longer access that app. You can reconnect later if you decide to use it again.


Built-in apps turn ChatGPT from a chatbot into a digital assistant that can plan trips, build playlists and help make decisions faster. (Nikolas Kokovlis/NurPhoto via Getty Images)

A quick privacy checklist for ChatGPT users

Using apps inside ChatGPT is convenient, but it is smart to review your settings from time to time. This checklist helps you reduce risk while still enjoying the features.

1) Review connected apps regularly and remove ones you no longer use

Connected apps can access limited account data while active. If you stop using an app, disconnect it. Fewer connections reduce your overall exposure and make account reviews easier.

2) Only connect apps you trust and recognize

Stick to well-known apps from established companies. If an app name looks unfamiliar or the app seems hastily put together, skip it. When in doubt, research the app before connecting it to your ChatGPT account.

3) Check account permissions after major app updates

Apps can change how they work after updates. Take a moment to review permissions if an app adds new features or requests additional access. This habit helps you spot changes early.

4) Avoid sharing sensitive personal or financial details in chats

Even trusted tools do not need your Social Security number, bank details or passwords. Keep chats focused on tasks and ideas. Treat ChatGPT like a public workspace, not a private vault.

5) Use a strong, unique password for your ChatGPT account

Your ChatGPT password should not match the password for any other account. When passwords are reused, attackers who steal your login from one site can try it on others, so a unique password alone blocks many common attacks. Consider using a password manager, which securely generates and stores complex passwords and removes the temptation to reuse them.

Next, see if your email has been exposed in past breaches. Our #1 password manager pick (see Cyberguy.com) includes a built-in breach scanner that checks whether your email address or passwords have appeared in known leaks. If you discover a match, immediately change any reused passwords and secure those accounts with new, unique credentials.

Check out the best expert-reviewed password managers of 2025 at Cyberguy.com.

6) Turn on two-factor authentication if available

Two-factor authentication (2FA) adds a second layer of protection. Even if someone gets your password, they still cannot access your account without the extra code. Enable it whenever possible.


Connecting apps inside ChatGPT saves time, but users should regularly review permissions and disconnect tools they no longer need. (Jakub Porzycki/NurPhoto via Getty Images)

7) Use strong antivirus software on all your devices

Antivirus software protects against malicious links, fake downloads and harmful browser extensions, including fake ChatGPT links, lookalike apps and malicious extensions designed to steal login details. Choose a trusted provider, keep automatic updates turned on and allow real-time protection to run in the background.

The best way to safeguard yourself from malicious links that install malware and potentially access your private information is to have strong antivirus software installed on all your devices. This protection can also alert you to phishing emails and ransomware scams, keeping your personal information and digital assets safe.

Get my picks for the best 2025 antivirus protection winners for your Windows, Mac, Android & iOS devices at Cyberguy.com.

8) Watch for fake ChatGPT links and scam downloads

Scammers often create fake ChatGPT downloads and lookalike offers. Always access ChatGPT through its official app or website. Never enter your login details through links sent by email or text.

Take my quiz: How safe is your online security?

Think your devices and data are truly protected? Take this quick quiz to see where your digital habits stand. From passwords to Wi-Fi settings, you’ll get a personalized breakdown of what you’re doing right and what needs improvement. Take my Quiz here: Cyberguy.com


Kurt’s key takeaways

ChatGPT is becoming a central hub for everyday tasks. With apps like Apple Music, Canva, Expedia, TripAdvisor and OpenTable, you can plan, create and decide without jumping between multiple platforms. That shift saves time and cuts down on friction. It also makes technology feel more helpful and less overwhelming. The best ChatGPT apps solve real problems, from discovering music to planning trips and choosing where to eat. As more apps roll out, ChatGPT will feel less like a chatbot and more like a true digital assistant. Just remember to stay smart, review connected apps and watch for scams.

If ChatGPT could replace three apps you use every day, which ones would you choose and why? Let us know by writing to us at Cyberguy.com.


Copyright 2025 CyberGuy.com. All rights reserved.

Kurt “CyberGuy” Knutsson is an award-winning tech journalist who has a deep love of technology, gear and gadgets that make life better with his contributions for Fox News & FOX Business beginning mornings on “FOX & Friends.” Got a tech question? Get Kurt’s free CyberGuy Newsletter, share your voice, a story idea or comment at CyberGuy.com.