In 2021, Israel used “the Gospel” for the first time. That was the codename for an AI tool deployed in the 11-day war against Gaza that the IDF has since deemed the first artificial intelligence war. The conclusion of that war didn’t end the conflict between Israel and Palestine, but it was a sign of things to come.

The Gospel rapidly spews out a mounting list of potential buildings to target in military strikes by reviewing data from surveillance, satellite imagery, and social networks. That was four years ago, and the field of artificial intelligence has since experienced one of the most rapid periods of advancement in the history of technology.

Israel’s latest offensive on Gaza, which marked two years on Tuesday, has been called an “AI Human Laboratory” where the weapons of the future are tested on live subjects.

Over the last two years, the conflict has claimed the lives of more than 67,000 Palestinians, upwards of 20,000 of whom were children. As of March 2025, more than 1,200 families were completely wiped out, according to a Reuters examination. Since October 2024, the number of casualties provided by the Palestinian Ministry of Health has only included identified bodies, so the real death toll is likely even higher.

Israel’s actions in Gaza amount to a genocide, a UN Commission concluded last month.

Hamas and Israel agreed to the first phase of a ceasefire deal that was announced on Wednesday, but Israeli strikes on Gaza were still continuing as of Thursday morning, according to Reuters. The agreed-upon plan involves the release of Israeli hostages by Hamas in exchange for 1,950 Palestinians taken by Israel and the long-awaited aid convoys. But it does not involve the creation of a Palestinian state, which Israel strictly opposes. On Friday afternoon, Israel said that the ceasefire agreement is now in effect, and President Trump has said there will be a hostage release next week. There have been at least three ceasefire agreements since October 7, 2023.

Aiding Israel’s destruction in Gaza is an unprecedented reliance on artificial intelligence that is, at least partially, supplied by American tech giants. Israel’s use of AI in surveillance and wartime decisions has been documented and criticized time and again by various media and advocacy organizations over the years.

“AI systems, and generative AI models in particular, are notoriously flawed with high error rates for any application that requires precision, accuracy, and safety-criticality,” Dr. Heidy Khlaaf, chief AI scientist at the AI Now Institute, told Gizmodo. “AI outputs are not facts; they’re predictions. The stakes are higher in the case of military activity, as you’re now dealing with lethal targeting that impacts the life and death of individuals.”

AI that generates kill lists

Although Israel has not disclosed its intelligence software fully and denied some of the AI usage claims, numerous media and non-profit investigations paint a different picture.

Also used in Israel’s 2021 campaign were two other programs: “Alchemist,” which sends real-time alerts for “suspicious movement,” and “Depth of Wisdom,” which maps out Gaza’s tunnel network. Both are reportedly in use this time around, as well.

On top of the three programs Israel has previously openly owned up to using, the IDF also utilizes Lavender, an AI system that essentially generates a kill list of Palestinians. The AI calculates a percentage score for how likely a Palestinian is to be a member of a militant group. If the score is high, the person becomes the target of missile attacks.

According to a report from Israeli magazine +972, the army “almost completely relied” on the system at least in the early weeks of the war, with full knowledge of the fact that it misidentified civilians as terrorists.

The IDF required officers to approve any of the recommendations made by the AI systems, but according to +972, that approval process just checked whether or not the target was male.

Many other AI systems in use by the IDF remain in the shadows. One of the few other programs to have come to light is “Where’s Daddy?” which was built to strike targets inside their family homes, according to +972.

“The IDF bombed [Hamas operatives] in homes without hesitation, as a first option. It’s much easier to bomb a family’s home. The system is built to look for them in these situations,” an anonymous Israeli intelligence officer told +972.

AI in surveillance

The Israeli army also uses AI in its mass surveillance efforts. Yossi Sariel, who led the IDF’s surveillance unit until he resigned late last year, citing failure to prevent the Oct. 7 Hamas attack, spent a sabbatical year training at a Pentagon-funded defense institution in Washington, D.C., where he shared radical visions of AI on the battlefield, according to a professor at the institute who spoke to the Washington Post last year.

A Guardian report from August found that Israel was storing and processing mobile phone calls made by Palestinians via Microsoft’s Azure Cloud Platform. After months of protests, Microsoft announced last month that it is cutting off access to some of its services provided to an IDF unit after an internal review found evidence that supported some of the claims in the Guardian article.

Microsoft denies prior knowledge, but the Guardian report paints a different picture. Microsoft CEO Satya Nadella met with IDF’s spying operations head Sariel in late 2021 to discuss hosting intelligence material on the Microsoft cloud, the Guardian reported.

“The vast majority of Microsoft’s contract with the Israeli military remains intact,” Hossam Nasr, an organizer with No Azure for Apartheid and a former Microsoft worker, told Gizmodo last month.

When asked for comment, Microsoft directed Gizmodo to a previous statement the tech giant made on the ongoing internal investigation into how its products are used by Israel’s Ministry of Defense.

On top of storing and combing through data, AI was used in translating and transcribing the gathered surveillance. But an internal Israeli audit, according to the Washington Post, found that some of the AI models that the IDF used to translate communications from Arabic had inaccuracies.

An Associated Press investigation from earlier this year found that advanced AI models by OpenAI, purchased via Microsoft’s Azure, were used to transcribe and translate the intercepted communications. The investigation also found that the Israeli military’s use of OpenAI and Microsoft technology skyrocketed after Oct. 7, 2023.

AI-driven surveillance efforts don’t just target residents of Gaza and the West Bank, but they have also been used against pro-Palestinian protestors in the United States. An Amnesty International report from August found that AI products by American companies like Palantir were used by the Department of Homeland Security to target non-citizens who speak out for Palestinian rights.

“Palantir has had federal contracts with DHS for fourteen years. DHS’s current engagement with Palantir is through Immigration and Customs Enforcement, where the company provides solutions for investigative case management and enforcement operations,” a DHS spokesperson told Gizmodo. “At the Department level, DHS looks holistically at technology and data solutions that can meet operational and mission demands.”

Palantir has not yet responded to a request for comment.

AI-driven accusations

The proliferation of AI-generated video and images has done more than just flood the internet with slop. It has also caused widespread confusion for social media users over just what’s real and what’s fake. The confusion is understandable, but it has been co-opted to discredit the voices of the oppressed. In this case, too, Gazans have been at the receiving end of the attacks.

The videos and photos coming out of Gaza are referred to in Israel as “Gazawood,” with many claiming that the images are staged or completely AI-generated. Since Israel has not allowed foreign journalists into Gaza and not only discredits but also disproportionately targets the enclave’s journalists in air strikes, the truth becomes harder to validate.

In one instance, Saeed Ismail, a real 22-year-old Gazan who had been raising money online to feed his family, was accused of being AI-generated due to misspelled words on his blanket featured in one video. Gizmodo verified his existence in July.

American big tech is leading the way

While Israeli tech startups find a sizable market in the U.S. and deals with government agencies like ICE, the relationship goes both ways.

It’s tough to precisely map out which American companies have fed the technology used to target and kill Palestinians. What is publicly known is which Big Tech companies proudly partner with the Israeli army, and the answer is almost all of them.

Microsoft has received much of the recent attention from activists, but Google, Amazon, and Palantir are considered some of the other top American third-party vendors for the IDF.

Google and Amazon employees have been protesting for years over “Project Nimbus,” a $1.2 billion contract signed in 2021 that tasks the American tech giants with providing cloud computing and AI services to the Israeli military.

Amazon suspended an engineer last month for emailing the CEO, Andy Jassy, about the project and speaking out against it in company Slack channels.

Although Google has also clamped down on employee criticism, when the deal was signed in 2021, Google officials themselves raised concerns that the cloud services could be used for human rights violations against Palestinians, according to a 2024 New York Times report.

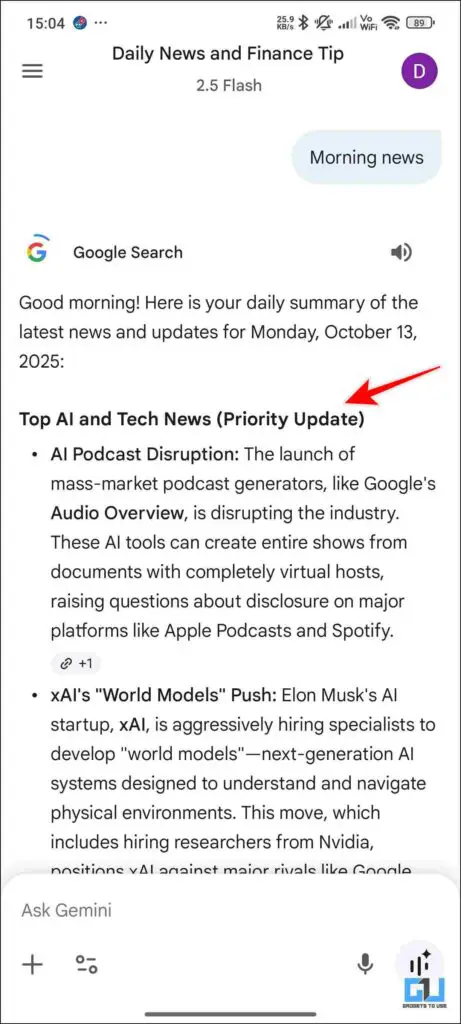

The Israeli military also requested access to Google’s Gemini as recently as last November, according to a Washington Post report.

Palantir, which offers software like the Artificial Intelligence Platform (AIP) that analyzes enemy targets and proposes battle plans, agreed to a strategic partnership with the IDF to supply its technology to “the current situation in Israel,” Palantir executive vice president Josh Harris told Bloomberg last year.

Palantir has been under fire globally for its partnership with the Israeli army. Late last year, a major Norwegian investor sold all of its Palantir holdings over concerns about violations of international human rights law. The investing company said that an analysis indicated that Palantir aided an AI-based IDF system that ranked Palestinians based on their likelihood of launching “lone wolf terrorist” attacks, which then led to preemptive arrests.

CEO Alex Karp has stood behind the company’s decision to back Israel in its war against Gazans many times.

The IDF has also inked data center deals with Cisco and Dell, and a cloud computing deal with independent IBM subsidiary Red Hat.

“IBM holds human rights and freedoms in the highest regard, and we are deeply committed to conducting our business with integrity, guided by our robust ethical standards,” IBM told Gizmodo. “As for the UN report, most of its claims are inaccurate and should not be treated as fact.”

Cisco, Dell, Google, Amazon, and OpenAI did not respond to a request for comment.

In August, the Washington Post unveiled a 38-page alleged plan for Gaza to become a U.S.-operated tech hub.

Called the Gaza Reconstitution, Economic Acceleration and Transformation Trust (or GREAT), the plan involves “temporarily relocating” the remaining two million or so Palestinians to build six to eight AI-powered smart cities, regional data centers to serve Israel, and something called “The Elon Musk Smart Manufacturing Zone.” The plan would convert Gaza into a “trusteeship” administered by the U.S. for at least 10 years.

Future of AI warfare and surveillance

AI companies want in on the battlefield.

There is huge demand from militaries around the globe for the AI systems provided by tech giants. America is pouring millions of dollars into integrating AI systems into military decision-making, such as identifying strike targets, as part of its Thunderforge program. Chinese leader Xi Jinping has also reportedly made military artificial intelligence a top strategic priority.

As the technology is still in its growing phase, active war zones and the civilians living there become test subjects for AI-powered killing machines. Similar to Gaza, Ukraine has also been described as a real-time testing ground for AI-powered military technology. In that case, though, the Ukrainian government itself is also on board with it.

Over the summer, the Ukrainian military announced “Test in Ukraine,” a scheme that invites foreign arms companies to test out their latest weapons on the front lines of the Russia-Ukraine war.

On top of its abundant deals with the Israeli army, Palantir is also very popular with the American Department of Defense. The company inked a $10 billion software and data contract with the U.S. Army in August.

One could argue that profit will always override every other incentive, but even Palantir drew a line recently when asked to participate in a controversial UK digital identification program, arguing that the program needed to be “decided at the ballot box,” according to the Times.

We’ve seen tech companies back away from military projects, like Project Maven, in the past when they felt the cultural winds blowing against them. For now, the Trump administration wants Americans leading the way on the AI battlefield. While external criticism and internal pressure from employees still exist at the biggest AI firms, they currently have a plausible argument that this is what the American people voted for. Until that changes, the gold rush for military funds will persist.