Robots are not yet autonomous killing machines. Even the rule briefly considered in San Francisco would have kept a human operator in the decision loop.

Still, police departments around the world are using, or looking to use, artificial intelligence (AI) to bolster existing systems. Some robots, such as Xavier, the autonomous wheeled vehicle deployed in Singapore, serve primarily for surveillance but nevertheless rely on “deep convolutional neural networks and software logics” to process images of infractions.

In the United States alone, some 1,172 police departments are now using drones. Given rapid advances in AI, it’s likely that more of these systems will have greater autonomous capabilities and be used to provide faster analysis in crisis situations.

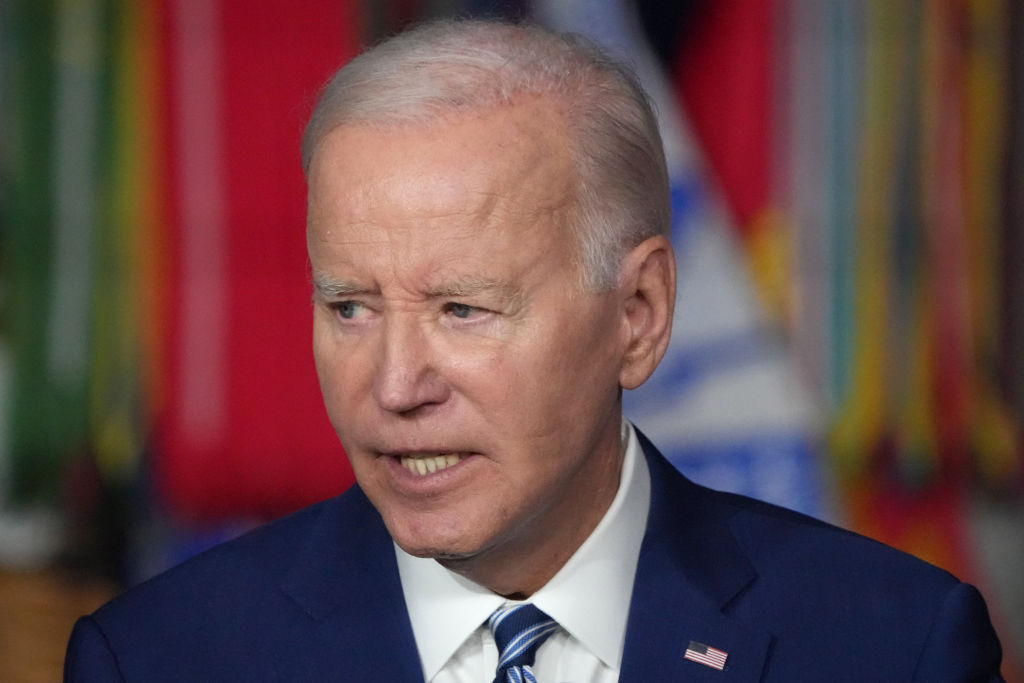

STR/AFP via Getty Images

While most of these robots are unarmed, many can be weaponized, and industry approaches vary. Axon, the maker of the Taser electroshock weapon, proposed equipping drones with Tasers and cameras. The company ultimately reversed course, but not before most of its AI Ethics board resigned in protest. Boston Dynamics recently published an open letter stating it will not weaponize its robots. Other manufacturers, such as Ghost Robotics, allow customers to mount guns on their machines.

Even companies opposed to the weaponization of their robots and systems can only do so much. It is ultimately up to policy makers at the local, national, and international levels to try to prevent the most egregious forms of weaponization. For now, the police are using the language of limited and emergency circumstances, but opening this Pandora’s box will have wider consequences for policing and society.

The promises that these robots and technologies will only be used in limited circumstances are not reassuring. As Elizabeth Joh, professor of law at the University of California, rightly points out, the current discussion, which would permit killer robots whenever police face an imminent threat, rests on too broad an interpretation of that standard.

Too often, technologies given to police or border services agencies for extreme situations have ended up being used to respond to protests or minor infractions. In addition, international discussion of military killer robots may feel removed from everyday policing, but technologies developed for battlefields, such as the aerial surveillance system used in Iraq, have made their way into American cities.

The appeal of using robots for policing and warfare is obvious: they can take on repetitive or dangerous tasks. Defending police use of killer robots, Rich Lowry, editor-in-chief of National Review and a contributing writer with Politico Magazine, argues that critics have been influenced by dystopian sci-fi scenarios and are all too willing to send others into harm’s way.

This argument echoes one heard in international fora on the question: that such systems could save the lives of soldiers and even civilians. But what Lowry and other proponents of lethal robots overlook is the wider impact on particular communities, such as racialized ones, and on developing countries. Once the technology is permitted in certain circumstances, avoiding a slippery slope of escalation becomes a critical challenge.

The net result is that already over-policed communities, such as Black and brown ones, face the prospect of being further surveilled by robotic systems. Saving police officers’ lives is important, to be sure. But is the deployment of killer robots the only way to reduce the risks faced by front-line officers? What about the risks of accidents and errors? And there’s the fundamental question of whether we want to live in societies with swarms of robots or drones patrolling our streets.

Technological advancements may well have their place in policing. But killer robots are not the answer. They would take us down a dystopian path that most citizens of democracies would much rather avoid. This is not science fiction; it is the reality we will face if policy does not keep pace with technological advancement.

Branka Marijan is a researcher at Project Ploughshares, an expert in the military and security implications of emerging technologies, and a contributor to the Centre for International Governance Innovation.

The views expressed in this article are the writer’s own.